I’m Bhanuka Gamage, a final-year PhD candidate in the Inclusive Technologies Lab at Monash University. My research sits at the intersection of Augmented Reality, Human–AI Interaction, and Accessibility, where I design context-aware AR tools to empower people with Cerebral Visual Impairment (CVI).

Alongside my academic work, I’ve worked as a Senior Machine Learning Engineer with over eight years of industry experience—building and scaling AI solutions, leading cross-functional teams, and delivering user-focused products. Most recently, I led the development of a smart feeding system for pets at ilume, combining trackers and smart bowls to help fight obesity in dogs. I’ve since wrapped up my work there to focus full-time on completing my PhD.

When I’m not in the lab, I’m usually deep in the Victorian High Country on my mountain bike—chasing trails and fresh air.

The best way to reach me is via LinkedIn.

Featured Publications

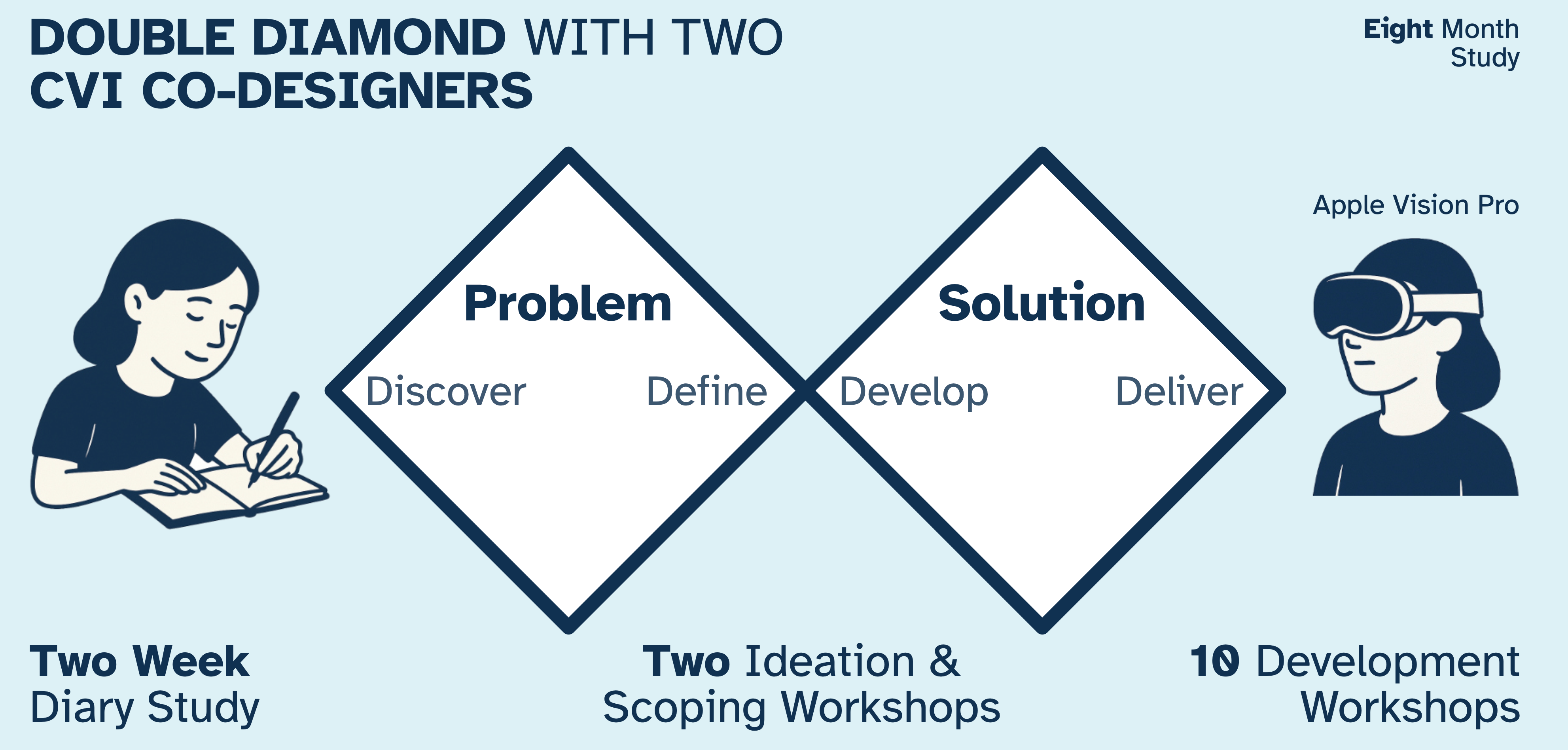

Smart Glasses for CVI: Co-Designing Extended Reality Solutions to Support Environmental Perception by People with Cerebral Visual Impairment (2025)

Bhanuka Gamage, Nicola McDowell, Dijana Kovacic, Leona Holloway, Thanh-Toan Do, Nicholas Price, Arthur Lowery, Kim Marriott

ACM SIGACCESS Conference on Computers and Accessibility (ASSETS'25)

This research explores smart glasses solutions for CVI through co-design with people who have Cerebral Visual Impairment. We develop assistive technology using extended reality to support environmental perception, demonstrating how glasses for CVI can enhance daily navigation and visual processing for individuals with brain-based vision impairments.

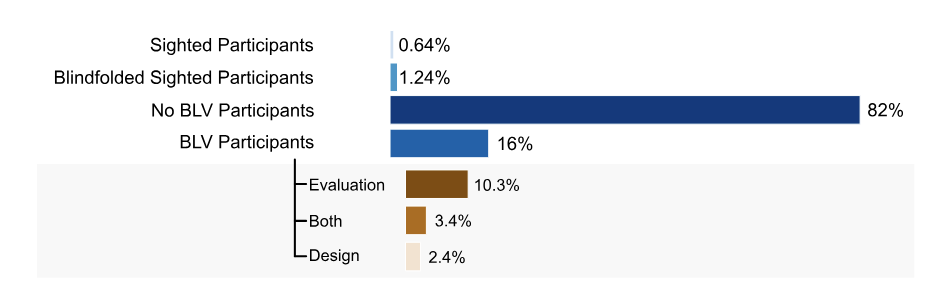

Vision-Based Assistive Technologies for People with Cerebral Visual Impairment: A Review and Focus Study (2024)

Bhanuka Gamage, Leona Holloway, Nicola McDowell, Thanh-Toan Do, Nicholas Price, Arthur Lowery, Kim Marriott

ACM SIGACCESS Conference on Computers and Accessibility (ASSETS'24)

A comprehensive review of vision-based assistive technology for people with Cerebral Visual Impairment. We examine smart glasses, assistive tech, and AI-powered solutions for CVI, identifying gaps in current technologies and presenting insights from our focus study to guide future assistive technology development for individuals with CVI.

What do Blind and Low-Vision People Really Want from Assistive Smart Devices? Comparison of the Literature with a Focus Study (2023)

Bhanuka Gamage, Thanh-Toan Do, Nicholas Seow Chiang Price, Arthur Lowery, Kim Marriott

ACM SIGACCESS Conference on Computers and Accessibility (ASSETS'23)

An investigation into what blind and low-vision people want from assistive smart devices including smart glasses and other assistive technology. Through literature analysis and a focus study, we identify key requirements for effective assistive tech solutions that address the real needs of people with vision impairments.

Recent News

- 🎉 Launching my new website

I’ve just rolled out a revamped site with featured publications, CVI research updates, and news—check it out!

- 🎙️ Round Table Speaker: Information Access for Print Disabilities

Presented my research on AR-powered Apple Vision Pro solutions designed to help individuals with Cerebral Visual Impairment read text and interact with their environment.

- 🚀 PhD Pre-submission Milestone Achieved

Successfully completed my pre-submission milestone—the final of Monash’s three major PhD checkpoints.

- 📄 CHI '24 Extended Abstracts Paper Published

Published "Broadening Our View: Assistive Technology for Cerebral Visual Impairment" at CHI '24 Extended Abstracts, highlighting the need for more assistive technology research focused on CVI.

- 🎓 ASSETS '23 Doctoral Consortium

Presented "AI-Enabled Smart Glasses for People with Severe Vision Impairments" at the ASSETS 2023 Doctoral Consortium in New York.

Achievements

Awards

- Best Student of the Year 2020 · Monash University Malaysia · Apr 2021

Most outstanding undergraduate academic performance in BCompSci (Honours).

- Overall Best Graduate Award · Monash University Malaysia · Apr 2021

Most outstanding graduate in BCompSci (Honours) for April 2021 graduation.

- ITEX 2021 – Gold Award · 32nd International Invention, Innovation & Technology Exhibition · Dec 2021

Won Gold Medal for “BaitRadar – a scalable browser extension for clickbait detection on YouTube using AI technology.”

- Monash High Achiever Award · Monash University · Apr 2018

Awarded to high-achieving students across the university.

- Global Award for Excellence – Computer Systems, Network & Security · Monash College · Feb 2018

Highest marks in MCD4700 across all Monash campuses.

- Global Award for Excellence – Engineering Mobile Apps · Monash College · Feb 2018

Highest mark in MCD4290 across all Monash campuses.

- High Achieving Award in Engineering – IT · Monash College · Feb 2018

Awarded to the top student of the cohort in the Engineering – IT stream.

Scholarships and Grants

- SIGACCESS ’23 Doctoral Consortium Travel Grant · SIGACCESS · Oct 2023

Selected for ASSETS 2023 Doctoral Consortium in New York—one of only 3 international PhD researchers; awarded a travel grant.

- FIT International Postgraduate Research Scholarship · Faculty of Information Technology, Monash University · Mar 2022

Awarded scholarship for PhD in Computer Science (valued at ~AUD $200,000).

- Monash Data Futures Institute PhD Scholarship · Monash Data Futures Institute · Mar 2022

Awarded scholarship for PhD stipend (valued at ~AUD $175,000).

Sports Awards

- Western Province Colours for Basketball · Ministry of Education, Sri Lanka · Dec 2016

Awarded for excellence in college basketball.

- Josephian College Colours for Basketball · St. Joseph’s College · Oct 2016

Awarded for excellence in college basketball.

- Merit Scholarship for School Colours · UCL, Sri Lanka · Feb 2017

25% scholarship for college colours in basketball.

Finalist

- Finalist, MSC Malaysia APICTA Competition · MSC Malaysia APICTA · Sep 2019

Finalist in the Tertiary Education category at the 20th APICTA competition.

Patents

- METHODS OF PREDICTING VIDEO LINKS AS CLICKBAIT

PI 2021006742 · Filed Nov 12, 2021 · Granted May 5, 2025

A novel computer-implemented method for predicting video links as clickbait using deep learning is described. The model is trained on the video link’s title, thumbnail, tags, audio transcript, comments and statistics. These attributes are fed into a deep learning network that has a separate sub-network for each attribute, so each sub-network handles a different modality. The sub-networks for the title, tags, audio transcript, and comments each involve an embedding layer and a long short-term memory layer. The sub-network for the thumbnail involves a convolutional neural network. The outputs of the sub-networks are merged through an average operator. The weight of the video link as clickbait is determined by the deep learning model.
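To make the merge step in the abstract more concrete, here is a minimal Python sketch of averaging the outputs of per-attribute sub-networks. It is an illustration under stated assumptions, not the patented implementation: the sub-networks themselves (embedding plus LSTM, or CNN) are not modelled, their outputs are stand-in vectors, and the names `MODALITIES`, `average_merge`, and `clickbait_score`, along with the linear-plus-sigmoid output head, are hypothetical choices made only for this example.

```python
from math import exp
from typing import Dict, List, Sequence

# The six attributes named in the abstract (the key names are assumptions).
MODALITIES = ("title", "tags", "transcript", "comments", "thumbnail", "statistics")


def average_merge(branch_outputs: Dict[str, Sequence[float]]) -> List[float]:
    """Element-wise mean of the equally sized sub-network output vectors."""
    vectors = [branch_outputs[name] for name in MODALITIES]
    width = len(vectors[0])
    if any(len(v) != width for v in vectors):
        raise ValueError("all sub-network outputs must have the same length")
    # zip(*vectors) groups the i-th element of every branch together.
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def clickbait_score(merged: Sequence[float],
                    weights: Sequence[float],
                    bias: float = 0.0) -> float:
    """Hypothetical output head: a linear layer followed by a sigmoid."""
    logit = sum(w * x for w, x in zip(weights, merged)) + bias
    return 1.0 / (1.0 + exp(-logit))


# Stand-in outputs: in a real model each vector would come from a trained
# sub-network for that attribute.
outputs = {name: [2.0, 4.0] for name in MODALITIES}
outputs["statistics"] = [8.0, 4.0]

merged = average_merge(outputs)                  # [3.0, 4.0]
score = clickbait_score(merged, [0.5, -0.375])   # logit is 0.0, so score is 0.5
print(merged, score)
```

Averaging requires every sub-network to emit a vector of the same length, which is why the sketch checks widths before merging. It also gives each attribute equal influence on the merged representation.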

Education

- PhD Computer Science · Monash University · 2022–2025 (expected)

- BCompSci (Honours) · Monash University Malaysia · 2017–2020

Experience

- Data Engineer · Human Health · 2025–present

- Senior Machine Learning Engineer · ilume · 2022–2025

- Head of Artificial Intelligence · Staple · 2021–2022

- Machine Learning Engineer · Map72 · 2019–2021